Patients see potential benefits of AI in mental healthcare

In a recent survey conducted by the Columbia University School of Nursing, a plurality of participants said they consider AI to be beneficial for mental healthcare, a finding that sheds light on patients’ perspectives on the technology as it becomes more widespread across the industry.

The research team emphasized that while AI adoption in healthcare is rapidly increasing, and multiple studies have sought to capture clinicians’ perspectives on the phenomenon, far fewer works have focused on patient perspectives.

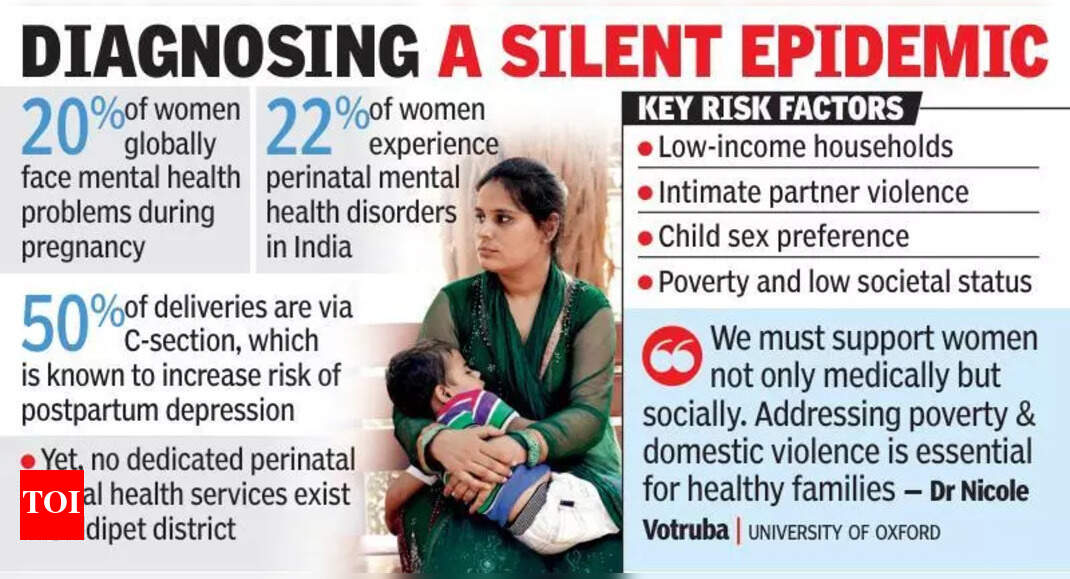

To date, studies exploring patients’ and the public’s attitudes toward healthcare AI have centered on what the researchers call somatic issues, such as perinatal health, radiology and general applications of the technology. Patient trust in healthcare AI has historically depended on the use case and clinicians’ support of the technology.

In the context of mental healthcare, patient feedback has been taken into account during the development of specific mental healthcare solutions, but more general investigations of the public’s view of mental healthcare AI are lacking.

The cross-sectional study surveyed a nationally representative cohort of 500 U.S. adults to understand public perceptions of the potential benefits, concerns, comfort and values around AI’s role in the mental healthcare space. Each participant was asked to provide structured and free-text responses to queries in each topic area.

The survey results demonstrated that 49.3% of respondents believe that AI is beneficial for mental healthcare, but these views differed based on sociodemographic characteristics.

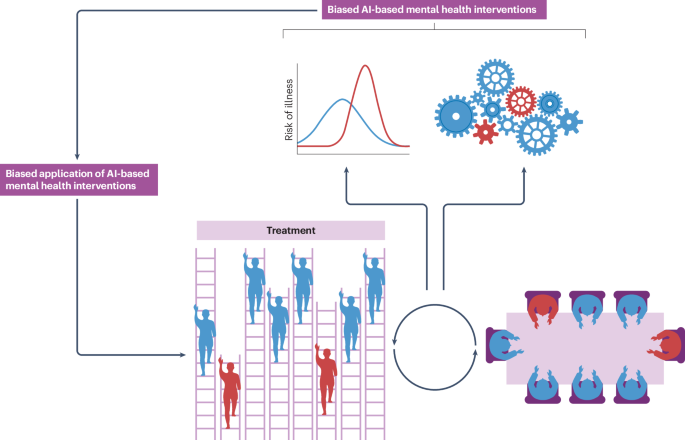

Black participants and those with lower self-reported health literacy were more likely to view AI as beneficial, whereas women were less likely to share that view.

Respondents’ concerns about the technology’s use were related to tool accuracy, incorrect diagnoses, the application of inappropriate treatments, confidentiality issues and a loss of connection with their mental healthcare provider.

In terms of patients’ AI-related values, 80.4% reported that they valued confidentiality, autonomy and being able to understand their individual mental health risk factors.

Further, 81.6% of participants indicated that they would consider the healthcare provider responsible for a mental health misdiagnosis following the use of a clinical AI tool.

These findings led the researchers to conclude that future studies looking at the adoption of AI in mental healthcare should explore strategies for effectively communicating three things to patients: a tool’s accuracy, the factors that drive individual mental health risk and how their data is used confidentially.

The researchers underscored that these steps are key to ensuring that patients and their providers can determine when AI use might be beneficial and can prioritize the preservation of patient-provider trust.

“This survey comes at a time where AI applications are ubiquitous, and patients are gaining greater access and ownership over their data. Understanding patient perceptions of if and how AI may be appropriately used for mental health care is critical,” noted study lead Natalie Benda, Ph.D., an assistant professor of health informatics at Columbia University School of Nursing, in a press release. “Our findings can support health professionals in deploying AI tools safely.”

Shania Kennedy has been covering news related to health IT and analytics since 2022.
